Mobile Deep Learning Narratives

Adobe Research

Context of Project: I undertook this research project in summer 2019 as a Deep Learning Research Intern in Adobe Research's Creative Intelligence Lab. I was selected as a 2019 Adobe Women-in-Research Scholar and given the chance to work with top researchers at Adobe as an undergraduate. The project was advised by my research mentors, Adobe researchers Fabian Caba Heilbron and Joon-Young Lee.

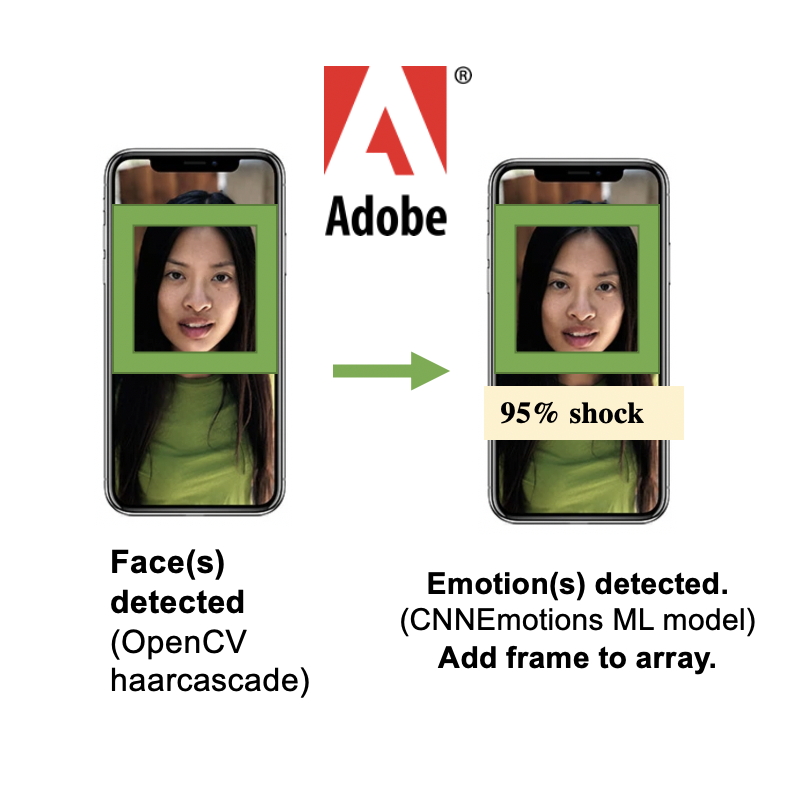

Project Synopsis: As people accumulate large volumes of video on their phones, it has become difficult to sift through the footage and identify which moments are most significant or eye-catching. We wondered how deep learning models could help with this problem and how one could build an intelligent video editor on mobile. We approached the problem by first asking: how does a deep learning model even run on mobile? How can a series of models be combined in a single mobile app? Because I was at Adobe for only 3 months, I took on a smaller research project to answer these initial exploratory questions. I created an app that captures 5 seconds of video, parses the frames, and selects the highest-emotion moments for 7 emotions of interest: anger, disgust, fear, happiness, neutrality, sadness, and shock.
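The selection step described above can be sketched in a few lines. This is a minimal illustration, not the app's actual code: the app was written in Objective-C, while this sketch uses Python for brevity. It assumes that an emotion classifier has already produced one score per emotion for every captured frame, and that "highest-emotion moment" means the single frame with the top score for that emotion. The names `EMOTIONS` and `select_peak_frames` are hypothetical.

```python
from typing import Dict, List, Sequence

# Emotion labels in the order the (assumed) classifier emits its scores.
EMOTIONS: List[str] = [
    "anger", "disgust", "fear", "happiness",
    "neutrality", "sadness", "shock",
]


def select_peak_frames(
    frame_scores: Sequence[Sequence[float]],
    emotions: Sequence[str] = EMOTIONS,
) -> Dict[str, int]:
    """Return, for each emotion, the index of the frame that scored highest.

    frame_scores[i][j] is the assumed classifier score of emotion j on frame i.
    Ties are resolved in favor of the earliest frame.
    """
    if not frame_scores:
        return {}

    peaks: Dict[str, int] = {}
    for e_idx, emotion in enumerate(emotions):
        # max() over frame indices keeps the first index on ties.
        peaks[emotion] = max(
            range(len(frame_scores)),
            key=lambda i: frame_scores[i][e_idx],
        )
    return peaks
```

Calling `select_peak_frames(scores)` on the per-frame scores of a 5-second clip returns a dictionary mapping each emotion to a frame index, which an app could then use to pull the matching frame for display.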

My Role:

- Researched how deep learning could be used to generate digital video narratives on mobile

- Developed an iOS app in Objective-C that uses deep learning models and tools (CoreML, OpenCV, MobileNetV2, EmotionNet) to choose high-emotion moments from a 5-second live video stream

- Presented research findings to Adobe researchers and product teams

Technologies Used: Objective-C, Xcode, CoreML, OpenCV, MobileNetV2, EmotionNet